E-Café

I hope to post a link to a doc file so this can be downloaded, but until I have a place to put the file, you can email me and I'll send it to you as an attachment.

All images can be viewed full size by right clicking on them and selecting "View in new tab/window."

Red: An assumption that needs to be looked into.

Blue: Future feature. Nice to have, but not a version one necessity.

Green: Recent changes

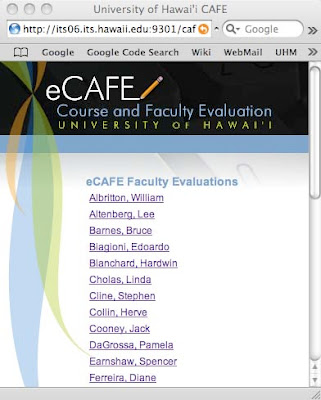

The site can be accessed by instructors, students, and designated staff members (e.g., a department secretary) by logging in to www.hawaii.edu/ecafe.

Index.html:

When the user goes to the eCAFE site, they will see the index.html page which will give a brief explanation of the system and a form through which the user logs in. Once the user logs in, their permissions will be checked, and they will be sent to the appropriate page for their role or roles (instructor, staff, student).

The time of year changes what users will see. For example, staff can edit surveys in the period consisting of roughly the first quarter of the semester. Outside of that time period, the editing functions will not appear. This applies to all user roles in various ways.

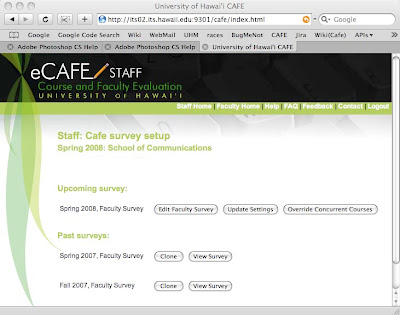

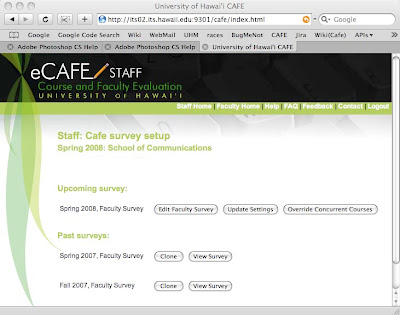

Staff: (Staff.html)

Upon logging in, the staff member sees any past surveys and a single current-semester campus, college, division, or department survey. Note: I will use the word organization to mean any of campus, college, division, or department hereafter.

From here, the user decides if they want to edit the survey for this semester, view current or past surveys, input the instructor-specific settings, or override the instructors for concurrent courses.

Survey questions/options are editable by the staff for the first ¼ of the semester. During this time, the edit, update, override, and clone buttons are available. Outside of this time period, staff are limited only to viewing the questions that appear(ed) on all past and current surveys.

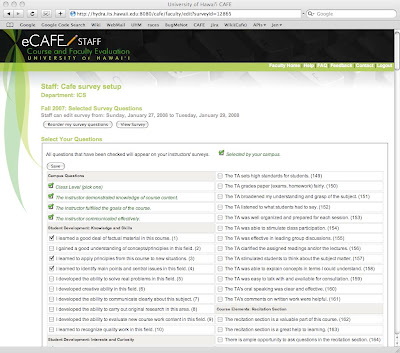

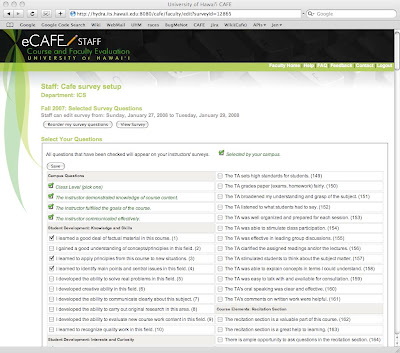

Upon selecting the “Edit Instructor Survey” button, the user is taken to a page that shows them a list of questions they can select from. This page also shows which questions all higher organizations (campus, college, etc.) have already selected; those questions are placed in a separate section and highlighted in green.

To select the questions for their own organization, the staff member checks the boxes next to the questions they want and clicks “Save.” With this action, the user is setting which questions are going to appear on the surveys of all instructors in their organization and the organizations under them.

For example, suppose the designated staff member for the Manoa campus selects the question “What is your overall rating of this Course?”, the designated staff member for the College of Arts & Sciences selects “Which aspects of this course were most valuable?”, and the staff member of the ICS department selects “Would you recommend this course to others?” All three questions will appear on the survey of an ICS instructor, while an instructor in the Art department would only get the campus and college questions.

Note that cross-listed courses are a special case. Since a cross-listed course is under multiple departments, the students will see a survey containing questions from all involved departments and their associated colleges and divisions.
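The way questions stack up across organizations can be sketched roughly as follows. This is only an illustration: the `PARENT` and `SELECTED` tables, the function names, and the rule of dropping repeated questions for cross-listed courses are assumptions of mine, not the actual eCAFE data model.

```python
# Hypothetical data: each organization's parent and the questions it selected.
PARENT = {
    "ICS": "Arts & Sciences",
    "Art": "Arts & Sciences",
    "Arts & Sciences": "Manoa",
    "Manoa": None,
}
SELECTED = {
    "Manoa": ["What is your overall rating of this Course?"],
    "Arts & Sciences": ["Which aspects of this course were most valuable?"],
    "ICS": ["Would you recommend this course to others?"],
    "Art": [],
}


def org_chain(org):
    """Return the organization and every organization above it, top-most first."""
    chain = []
    while org is not None:
        chain.append(org)
        org = PARENT[org]
    return list(reversed(chain))


def survey_questions(departments):
    """Collect the organization-set questions for a course.

    A cross-listed course passes several departments. Questions already
    collected are skipped (an assumption), keeping first-seen order.
    """
    questions = []
    for dept in departments:
        for org in org_chain(dept):
            for question in SELECTED[org]:
                if question not in questions:
                    questions.append(question)
    return questions
```

Calling `survey_questions(["ICS"])` yields all three questions from the example, while `survey_questions(["Art"])` yields only the campus and college questions.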

Eventually, there will be a means to create a survey specific to TAs, but for now, each organization is limited to creating only one set of survey questions, which all instructors under them will see on their surveys.

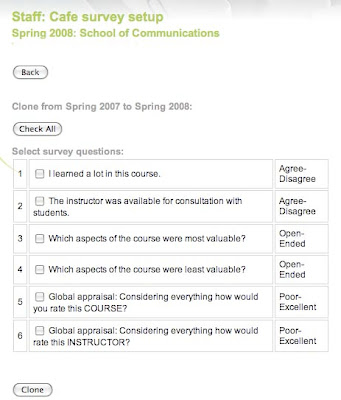

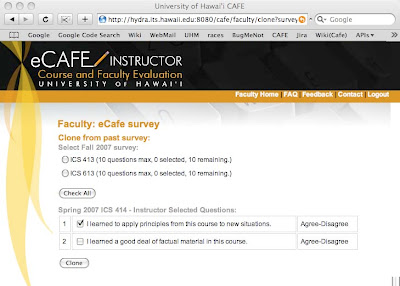

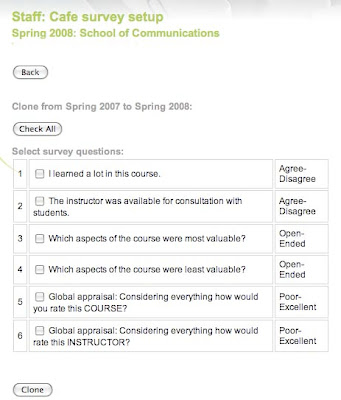

Staff, Clone.html:

In setting up the current semester’s survey, the staff member can choose to clone the questions from a past survey. Next to each past survey is a button labelled “clone”. This button takes the user to a page showing all questions from that survey. There is a checkbox next to each question. The staff member can select all or some of these questions and click “Clone” which will cause the selected questions to be copied to the current semester’s survey.

Note that although this action copies the questions from a previous survey, the user can choose to edit the current set of questions after cloning. This means the user can copy questions from a past survey and then go to the edit page to add new ones to the copied set. The newly cloned questions will show up as being already selected. Also, the user can choose to clone questions from multiple past surveys. The system will automatically remove duplicate selections so a question isn’t repeated.
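A minimal sketch of the duplicate removal described above, assuming questions can be compared directly (by text or by id). The function name `clone_questions` is hypothetical.

```python
def clone_questions(current, selected_from_past):
    """Add the chosen past-survey questions to the current selection.

    Questions already on the current survey are skipped, so cloning from
    several past surveys never repeats a question.
    """
    merged = list(current)
    for question in selected_from_past:
        if question not in merged:
            merged.append(question)
    return merged
```

Cloning from a second past survey is just another call with the result of the first.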

Staff, View.html:

To see the questions that were on past surveys, the staff member clicks on the “View Survey” button on the main page. This shows the user the survey appearing exactly as the students see it, sans “submit” button. The only other action allowed on the view page is through the clone button, which will take the user to the same clone page described above.

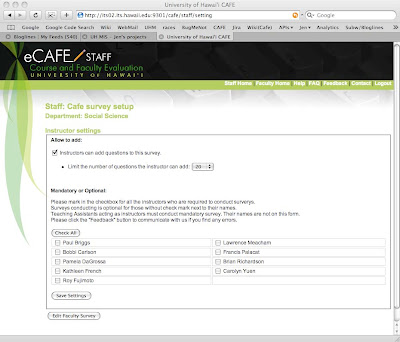

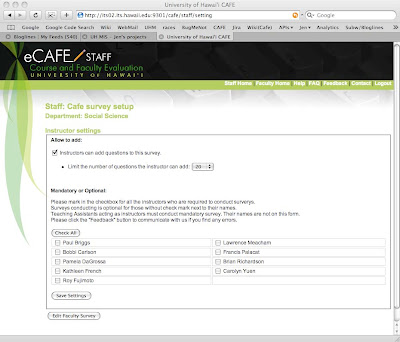

Staff, Settings.html:

The staff member must also choose whether or not to set restrictions on what the instructors can do with their surveys. Some departments want the surveys taken unaltered; some allow instructors to add questions. If they allow the instructors to add their own questions, they can choose to limit the number the instructors can include.

The user can change these options by clicking on the “Update Settings” button on the main page. They are taken to the settings page shown here. At the top of this page is a checkbox that sets whether their instructors are allowed to add their own questions to the survey in addition to the ones set by the organizations. If instructors can add questions, the staff member can also place a limit on the number of questions each instructor may include.

The lower half of this page is where the instructors are set to mandatory or optional. An unchecked box means the instructor is optional. This means that the instructor will have a choice of whether or not to present their surveys to their students. What this means for the instructor will be discussed in detail in the instructor section of this document. A checked box means that the instructor is mandatory and has no choice with regard to the students receiving the survey. The system will present it to students automatically. Note that x99 courses are always exempt from mandatory surveys. Even if an instructor is selected as having a mandatory survey, any x99 course they teach will be exempt.
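The x99 exemption could be checked along these lines. I am assuming here that "x99" means any course number ending in 99 (199, 499, 699, and so on) and that course numbers are plain integers; both function names are hypothetical.

```python
def is_x99(course_number: int) -> bool:
    """Assumption: an x99 course is any course whose number ends in 99."""
    return course_number % 100 == 99


def survey_is_mandatory(instructor_mandatory: bool, course_number: int) -> bool:
    """A survey is mandatory only if the instructor is mandatory and the course is not x99."""
    return instructor_mandatory and not is_x99(course_number)
```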

Any person teaching a course under that organization will appear in this list of instructors, even if the instructor is technically not employed by that organization. This means that a single instructor can appear in the lists of multiple organizations. This also means that if a course is crosslisted, the instructor teaching the course will show up in all departments for that course. For example, if a course is crosslisted as W.S. and HIST, both the W.S. and HIST department staff members will see that instructor on their settings list.

Since both departments’ settings will apply to the same instructor, and those settings may conflict, we are adding a page where the departments can indicate which department is “primary” for a given course. On the page, the staff member will see a list of all courses crosslisted with their department. Next to each course is a checkbox “This department is the primary funder of this course.” Whichever department checks that box is considered primary, and their settings will apply to that instructor for that crosslisted course.

Once set, all other departments for that crosslisted course will see, instead of a checkbox, a label stating “Department ‘X’ is the primary for this course, if this is in error, please contact …”

For example, Psychology 385 (Consumer Behavior) is crosslisted with Marketing 311. If the Staff member for Psychology logs in before Marketing and sets PSY to be primary for course 385, then the Staff member for Marketing will see "Psychology is the primary department for this course." If this is incorrect, the Marketing Staff member will need to contact Psychology to work things out.

Since Psychology is the primary, when the instructor logs in, they will get whatever settings were placed by that department. So if Psychology set all instructors to optional and limited them to 5 questions, while Marketing set all instructors to mandatory with a limit of 10 questions, then the instructor will be optional with a 5 question limit.

If no one sets their department to be the primary, then the instructor will get the most restrictive settings of the crosslisted departments. So in the above scenario, the instructor will be mandatory and be limited to 5 questions for that course.
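The resolution rule for crosslisted courses can be sketched as below. The `Settings` class and `effective_settings` function are hypothetical names, and I am assuming "most restrictive" means mandatory if any department says mandatory, combined with the smallest question limit (a department that forbids added questions could be modelled as a limit of 0).

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class Settings:
    mandatory: bool
    question_limit: int  # most questions the instructor may add


def effective_settings(by_department: Dict[str, Settings],
                       primary: Optional[str] = None) -> Settings:
    """Pick the settings that apply to an instructor for a crosslisted course."""
    if primary is not None:
        # A primary department was chosen: its settings win outright.
        return by_department[primary]
    # No primary: fall back to the most restrictive combination.
    all_settings = list(by_department.values())
    return Settings(
        mandatory=any(s.mandatory for s in all_settings),
        question_limit=min(s.question_limit for s in all_settings),
    )
```

With Psychology set to optional with a limit of 5 and Marketing set to mandatory with a limit of 10, passing `primary="PSY"` returns optional with 5 questions, and passing no primary returns mandatory with 5 questions, matching the scenario above.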

TODO: What happens at the different organizational levels? It should never happen that the college and the department will both be messing around with settings, but it might. If the College of Arts and Sciences sets all instructors as mandatory, but the art department sets them as optional, whose settings should apply? I’m assuming the department’s, but is that correct?

TODO: Each organization’s staff member needs to see the contact information of the staff members above and below them (if any) in case of disputes.

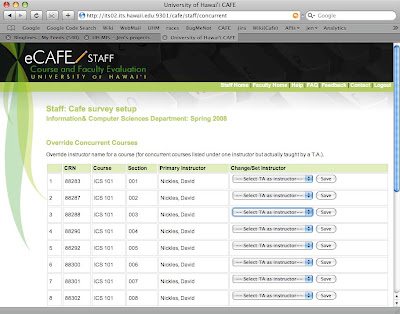

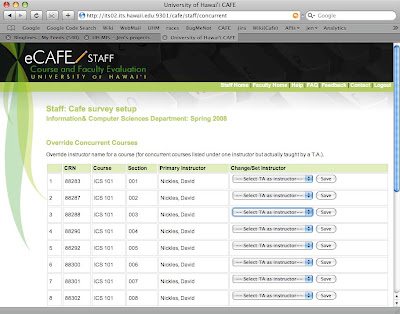

Staff, Concurrent.html:

The final action available to a Staff member is through the “Override concurrent courses” button. This takes the user to a page where they can change the instructor of a concurrent course.

Concurrent courses are multiple sections of the same course that are listed under the same instructor but are actually taught by TAs. On this page, the staff member can select from a list of TAs so that the survey will be for the actual instructor (the TA) rather than the listed one.

Instructor

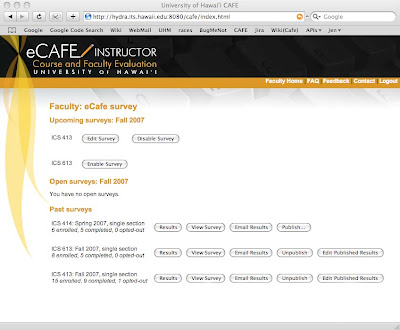

Instructor: (Instructor.html)

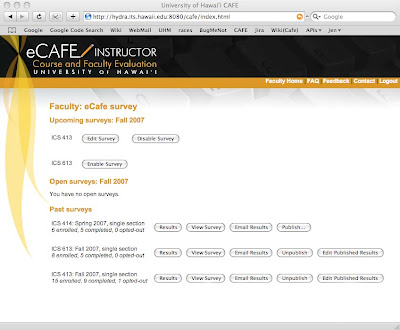

When instructors log in, they are taken to their main page. From this page, they can choose to edit, view, enable/disable upcoming surveys, view open surveys, and see the results of past surveys.

Instructor, upcoming surveys:

Upcoming surveys are those which have not yet opened for students to take. They are in the editing stage. The instructor can edit their surveys for the two quarters (the middle half) of the semester following the staff’s editing period. After that, no further changes are permitted.
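The editing periods (first quarter of the semester for staff, the following two quarters for instructors) might be computed like this. It is only a sketch: the exact cut-off dates are described as rough in this document, so the hard 25% and 75% boundaries, the function name, and the phase labels are all assumptions.

```python
from datetime import date


def editing_phase(today: date, semester_start: date, semester_end: date) -> str:
    """Return who may edit surveys today: 'staff', 'instructor', or 'closed'."""
    total_days = (semester_end - semester_start).days
    elapsed = (today - semester_start).days
    if elapsed < 0 or elapsed > total_days:
        return "closed"  # outside the semester entirely
    fraction = elapsed / total_days
    if fraction < 0.25:
        return "staff"  # first quarter: staff set organization questions
    if fraction < 0.75:
        return "instructor"  # next two quarters: instructors edit
    return "closed"  # final quarter: no further changes
```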

When an instructor with upcoming surveys logs in, they will see each of their courses listed. The buttons they see next to those courses vary depending on the settings provided by staff members.

If the instructor is allowed to add questions to their survey, they will see an “Edit Survey” button which takes them to the edit page (discussed below). If the instructor is not permitted to add questions, they will see a “View Survey” button instead. The view button shows them the survey exactly as the students will see it, minus the “Submit” button. They will not be able to make any changes to it.

When an instructor has an optional setting, meaning they have the choice of whether or not to give the survey to their students, then they see an additional button, either “Enable” or “Disable.” Instructors who are mandatory will not see these buttons. All surveys are enabled by default, so optional instructors will initially see a “Disable” button next to each course’s survey. If the instructor does not wish to give the survey, they have to select the disable button. Once disabled, the Disable button will be replaced by an “Enable” button should the instructor change their mind. In short, all optional instructors are opted-in by default and must opt-out if they do not wish to participate. If they are willing to participate, no action is required.
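The button rules for upcoming surveys can be summarized in a small sketch. The function name and parameters are hypothetical; the button labels are the ones used above.

```python
def upcoming_survey_buttons(can_add_questions: bool, mandatory: bool,
                            enabled: bool = True) -> list:
    """Return the buttons shown next to one upcoming survey.

    Surveys are enabled by default, so an optional instructor first sees
    'Disable'; mandatory instructors never see Enable/Disable.
    """
    buttons = ["Edit Survey" if can_add_questions else "View Survey"]
    if not mandatory:
        buttons.append("Disable" if enabled else "Enable")
    return buttons
```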

TODO: Should we give organizations the ability to set whether optional instructors are opted-in or out as their default setting? Or should everyone be opted in as I wrote?

Instructor, open surveys:

Open surveys are those which are available for students to fill out. Once open to responses, the instructors will only see a “View Survey” button which takes them to an uneditable view of the survey. This shows only the survey, not any of the results being collected. Next to each open survey on the main page is a count of how many students are in the class and how many of those students have taken the survey.

Instructor, completed surveys:

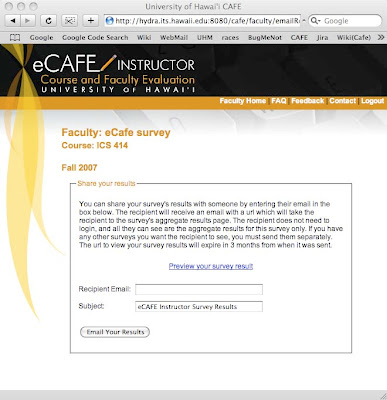

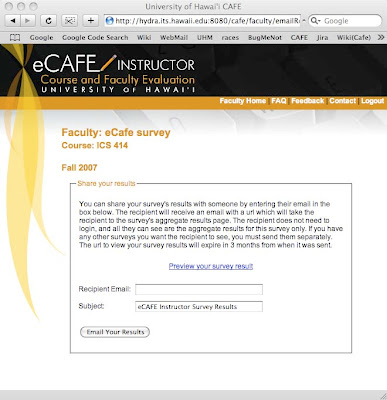

These are surveys that happened in the past. They are no longer open for students to fill out. Instructors can choose to view the results, see the survey (uneditable view), email their results to someone, save as PDF, publish/unpublish the results, and clone the survey to another survey. The image of the instructor’s main page is missing two buttons: there should be one labelled “Clone” next to each of the completed surveys, as well as a “Save to PDF” button.

The “View Survey” button for completed surveys has the same effect as it did for upcoming or open surveys: it shows the user an uneditable view of the survey looking exactly like the students saw it, sans Submit button.

The button “Email Results” takes the user to a form through which they can send an email containing a link to their summarized results (discussed in results section later). The link will allow the recipient(s) to see the aggregate results only, not the individual surveys. The recipient will not have to login to see the results, so no account is needed.

“Save to PDF” automatically downloads the instructor’s aggregate results to their computer in PDF format. The file is named after the course and the semester, separated by a hyphen, so ICS 414 in the Fall 2007 semester will result in a file titled “ICS 414-Fall 2007.pdf.”

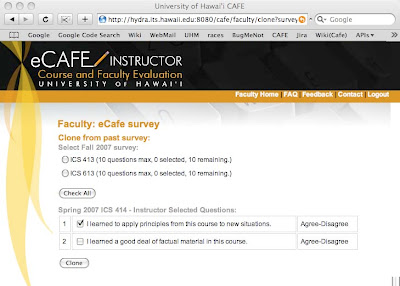

Instructor, Clone Survey:

Instructors can choose to copy their questions from any past survey into the current semester’s survey.

On the main page, there is a “Clone” button next to each past survey. Clicking that button takes the user to a page showing all their personally selected questions from that survey. This list will not include questions set by organizations.

The instructor can select any or all questions, indicate which course’s survey(s) they want to copy the questions into, and click “Clone.” All checked questions will be copied into the other surveys. Should the user want to make further changes to the upcoming surveys, they can edit them via the Edit button on the main page, as described earlier.

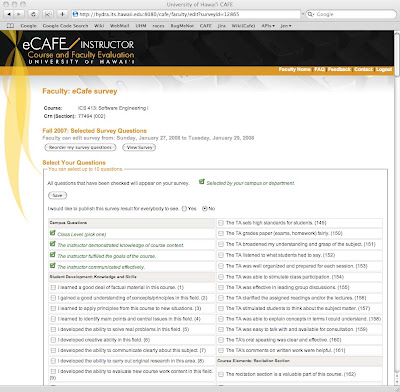

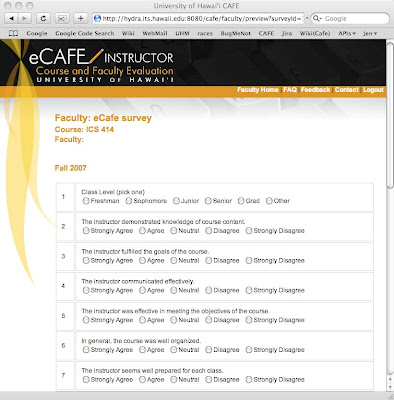

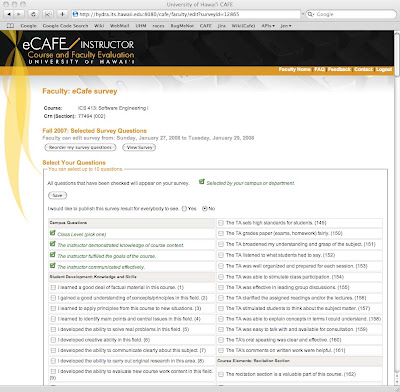

Instructor, Edit survey:

Clicking the Edit button (if provided) next to an upcoming survey on the main page takes instructors to a page where they can add questions to, and remove questions from, their survey.

The data shown at the top of the page include the following: campus, department, CRN, course name(s), section number(s), instructor name, semester, year, and survey open/close dates. If there are multiple sections of a course assigned to a given instructor, all of them are given the same survey, so the instructor will see multiple courses and sections here in that instance.

This will be followed by a set of questions they can select from in order to set up their survey. Any questions set by their organization or others above them will be displayed as already selected. To add questions to their survey, the instructor checks the boxes next to the questions they want and clicks the “Save” button. If they decide to remove a previously selected question, they uncheck its box and save. While instructors can remove questions that they themselves have selected, they cannot remove questions selected by any organizations.

The previous version of eCAFE had two instructor features that will not be included in the new one: the ability to create their own questions, and the ability to set the order of those questions. See the links on those topics for the reasons why.

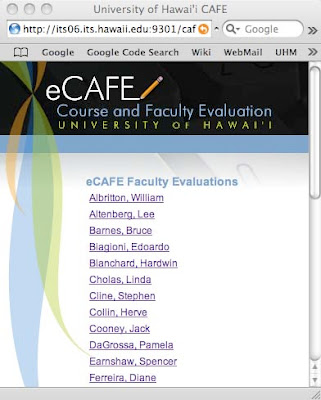

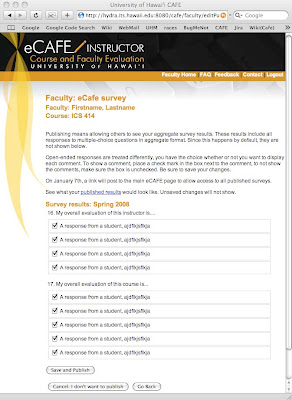

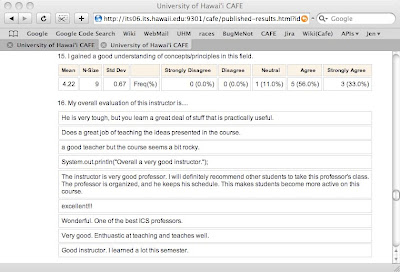

Instructor, Publish/Unpublish/Edit Published:

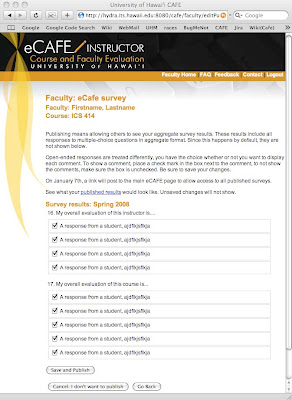

Publishing results means the instructor agrees to have the aggregate results of the survey posted for all to see. Two weeks after the instructors get their results, anyone will be able to go to the published results page and see the names of all instructors who published their results. Clicking on a name will show all published survey results for that instructor. The multiple-choice questions are aggregated; no one but the instructor ever sees the individual survey responses. Open-ended question responses are included, too, at the instructor’s discretion.

When an instructor clicks the “Publish” button, they see a page with some explanatory text, and a list of all open-ended responses to their survey questions.

Aggregated responses to multiple-choice questions are included in published results by default, but since students sometimes include questionable material in their answers, we allow instructors to select which open-ended responses they will/won’t show.

Each open-ended question is displayed along with a list of the students’ responses, and there is a checkbox next to each response. The instructor unchecks the boxes of the responses they do not want to have displayed, and clicks “Save and Publish.” At that point, the instructor’s name is added to the page of all published instructors.

While instructors can decide not to show some of the open-ended responses, a note will appear next to the results stating something to the effect of “showing x out of y responses,” so there is a disincentive to show only positive responses.
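A rough sketch of how the published open-ended responses and the accompanying note could be produced. The function name, the use of list positions to identify unchecked responses, and the exact wording of the note are assumptions.

```python
def published_responses(responses, hidden_indexes):
    """Return the responses the instructor left checked, plus the display note.

    hidden_indexes holds the positions of the responses the instructor unchecked.
    """
    shown = [r for i, r in enumerate(responses) if i not in hidden_indexes]
    note = f"showing {len(shown)} out of {len(responses)} responses"
    return shown, note
```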

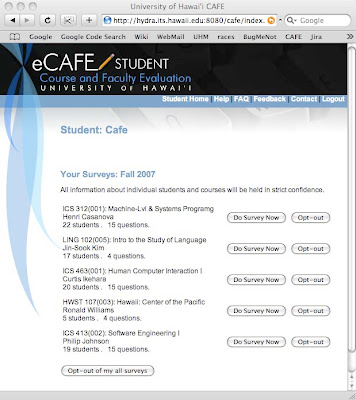

Student: (Student.html)

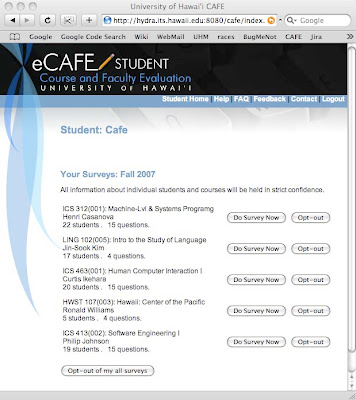

Students can fill out the surveys starting three weeks prior to finals and ending on the Friday before finals.
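The open period could be derived from the first day of finals as sketched here. I am reading "three weeks prior to finals" as exactly 21 days before the first day of finals and "the Friday before finals" as the last Friday strictly before that day; both readings, and the function name, are assumptions.

```python
from datetime import date, timedelta


def survey_window(finals_start: date):
    """Return (opens, closes) for the student survey period."""
    opens = finals_start - timedelta(weeks=3)
    # weekday(): Monday is 0 and Friday is 4. Step back to the previous Friday;
    # if finals start on a Friday, go back a full week.
    days_back = (finals_start.weekday() - 4) % 7 or 7
    closes = finals_start - timedelta(days=days_back)
    return opens, closes
```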

At the start of this period, all students who are registered for courses that are giving surveys are sent an email. These emails will repeat each week of the open period until the student either completes or opts out of all their surveys. Note that there is no way for the student to opt out of the eCAFE notices themselves during this period, short of opting out of the surveys, although they may just end up putting us in their spam filter.

If the student logs into eCAFE outside of this period, they are shown a message stating that eCAFE is not open for surveys, along with the dates that it will be or was open.

When a student logs in during this period, we show them the student.html page regardless of whether or not they have any surveys. If there are no surveys, we show them a message to that effect.

If they do have unfinished surveys, they can do any of the following: complete the surveys, opt-out of specific surveys, or opt-out of all their surveys. Should they choose one of the opt-out options, they will have to respond to a prompt before the action is put into effect.

Opt-out means that they choose not to do the survey. If selected, they will no longer receive nag-notices for that survey. For the survey’s records, we mark the survey as being opted-out so it doesn’t factor into completion rate statistics.

Classes with three or fewer students will result in the survey not being given as there are not enough students in the class to provide a reasonable expectation of anonymity.
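The class-size cut-off and the effect of opt-outs on completion rates might look like this in code. The status strings and function names are hypothetical, and I am assuming "doesn’t factor in" means opted-out surveys are left out of both the numerator and the denominator.

```python
MIN_CLASS_SIZE = 4  # classes with three or fewer students get no survey


def survey_is_given(enrolled: int) -> bool:
    """Surveys are skipped when the class is too small to protect anonymity."""
    return enrolled >= MIN_CLASS_SIZE


def completion_rate(statuses):
    """Fraction of surveys completed, ignoring opted-out ones.

    statuses is a list of 'completed', 'pending', or 'opted_out'.
    Returns None when nothing is left to count.
    """
    counted = [s for s in statuses if s != "opted_out"]
    if not counted:
        return None
    return sum(s == "completed" for s in counted) / len(counted)
```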

If a student does have surveys to complete, and selects the “Do Survey” button, they are taken to the survey.html page.

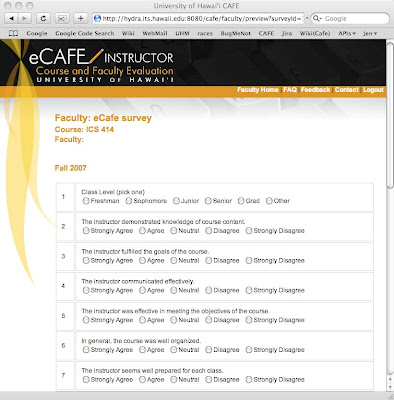

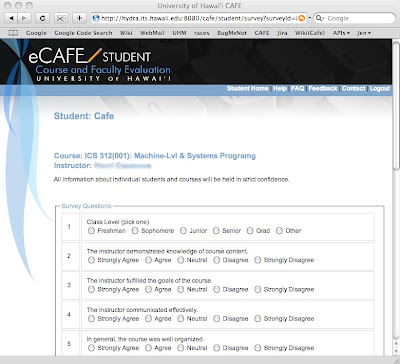

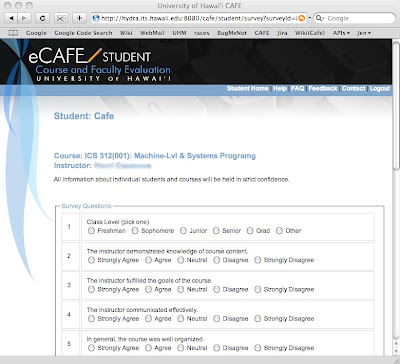

Student, Survey.html:

This is where students fill out their surveys and submit them. The page shows the course and instructor data, followed by the questions.

Cancel will prompt the user, telling them any questions they have filled out will be lost. Assuming the user says ok, the survey is abandoned, its status unchanged, and the student is returned to the student.html page where the survey still shows up, complete with “Do Survey” and “Opt-out” buttons.

The submit button causes the survey to be stored in the database. Clicking submit will also cause the survey to be marked as completed on the student’s record. They will then be returned to the student.html page where the just-finished survey will be marked as completed, and will no longer have any buttons next to it. Surveys are final: once submitted, they cannot be retaken.

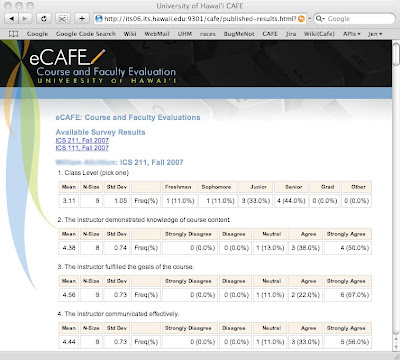

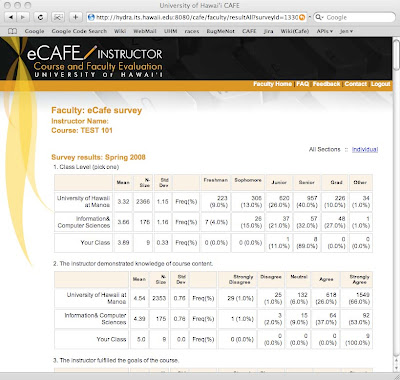

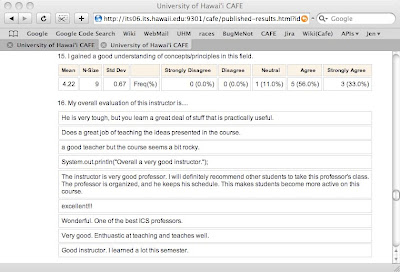

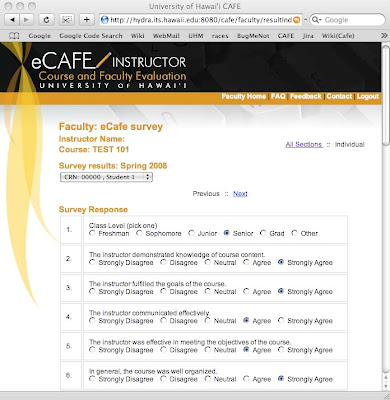

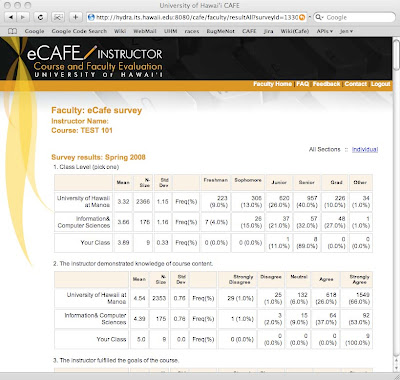

Instructor, Survey Results:

This is where instructors view their students’ responses to a survey. This data will not be available until the day after grades are due, at which point, instructors will receive an email reminder telling them the results are ready for viewing.

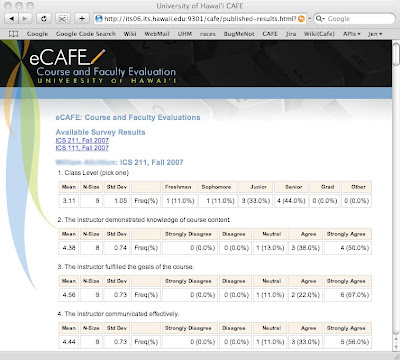

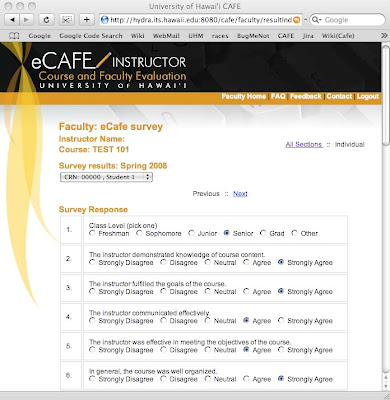

When they first click the “View Results” button of the main page, the instructor will see an aggregate view of all their results.

For each multiple-choice question, it will show the number of students who answered, the number of students who selected each possible answer, the percentage of respondents who selected each possible answer, the mean, the standard deviation, and a comparison between their results and those of everyone in their department or campus who asked the same question. If no one else asked the same question, then the three rows of campus, department, and class will all have the same data. Open-ended responses are shown in a list format.
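The per-question numbers could be computed roughly as follows. This sketch assumes answers are coded on a 1 to 5 scale, that at least one student answered, and that the standard deviation is the population form (`pstdev`); the document does not say which form is used, and the function name is hypothetical.

```python
from collections import Counter
from statistics import mean, pstdev


def summarize(answers, choices=(1, 2, 3, 4, 5)):
    """Summarize one multiple-choice question for a non-empty list of answers."""
    counts = Counter(answers)
    n = len(answers)
    return {
        "respondents": n,
        "counts": {c: counts[c] for c in choices},
        "percent": {c: 100 * counts[c] / n for c in choices},
        "mean": mean(answers),
        "stdev": pstdev(answers),  # population standard deviation (assumption)
    }
```

The same function could be run over the class, department, and campus answer sets to fill the three comparison rows.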

From the aggregate view, there is a means to see the sets of responses given by each student, similar to having a stack of paper survey responses to flip through. By clicking on the “Individual” link, the instructor will see all responses of one of their students, and can see the others by using a drop-down menu or clicking on the “previous” and “next” links.

Manager (manager.html):

A Manager is someone who is specified by their organization to have the ability to see the results of all users who have their surveys set to mandatory. There can be multiple people with this permission and one of them is likely to be the organization secretary.

Means of viewing results by non-instructors:

There are a number of ways that others will be able to see the aggregate results of an instructor’s surveys.

1.) If the instructor was set as mandatory for their organization, then their survey(s) will be available to specific people pre-determined by the department. In this case, the instructor will see a list of who will have access to their results on both the setup and results pages, so they can protest if this is in error. Viewing of results through this manner is limited to people with the Manager role.

2.) If the instructor emails them a link to the survey. Any recipient of the email can see these results, no account or login is necessary.

3.) If the instructor has granted them permission to view their results. This feature needs more definition, but the idea is that the instructor will be able to enter the UH usernames of individuals who have ongoing access to their aggregate results. While this permission can be revoked by the instructor at any time, the intent is that they can set who is allowed to see their results, and have that permission in effect for ongoing semesters. The viewer must login to eCAFE in order to see the results.
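The three access paths above can be sketched as a single check. Everything here is illustrative: the `ResultAccess` class, its field names, and the idea that an emailed link carries a token are assumptions, since the document only says that the link works without a login.

```python
from dataclasses import dataclass, field


@dataclass
class ResultAccess:
    instructor: str
    mandatory: bool
    managers: set = field(default_factory=set)           # people with the Manager role
    granted_usernames: set = field(default_factory=set)  # ongoing permission from the instructor
    emailed_tokens: set = field(default_factory=set)     # tokens embedded in emailed links


def can_view_aggregate(access, username=None, token=None):
    """Decide whether a visitor may see an instructor's aggregate results."""
    if token is not None and token in access.emailed_tokens:
        return True  # path 2: emailed link, no login needed
    if username is None:
        return False  # every other path requires a login
    if username == access.instructor:
        return True
    if access.mandatory and username in access.managers:
        return True  # path 1: Manager viewing a mandatory instructor
    return username in access.granted_usernames  # path 3: granted permission
```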

Misc Future Features:

- Add an open-ended question at the end of all surveys to get general comments.

- Add a “rate instructor on a scale of 1-5” and use that to generate “stars” for the publish page.

- Include means to NOT show dept and campus statistics on an individual’s summary page.

- Add links on site to research articles re: response rates and online surveys.

- Add link to blog from main site.

- Make sure an individual can use the site even if their organization is not using it.

Big changes from past version:

- Changed handling of cross-listed courses, again.

- Removed instructor ability to create new questions and to order those questions.

- Removed staff ability to reorder department questions.